I should start with the result so that you can see if it’s worth doing this:

The video shows the deposits from institutions and Subject Centres to Jorum tagged ‘ukoer’ from January 2009 to November 2011. In total over 8,000 deposits condensed into 5 minutes (there are more records, but these were the ones that could be reconciled against an institution name).

Here’s the recipe I used to do it, which should be easy to modify for your own and other repositories. As explaining it takes longer than actually doing it, I’m going to assume you understand some basic tools and techniques, but you can always leave a comment if something isn’t clear.

Let’s start by looking at what it is we are trying to achieve. The animation is generated using code from the open source Gource project. Gource uses an input log file to visualise software commits using the format shown below. So for the Jorum visualisation we need to generate a file with timestamp, creator (in this case the submitter’s host institution) and title (prefixed by subject classification).

Gource log format

The user andrew adding the file src/main.cpp on Thu, 03 Jun 2010 05:39:55 GMT (1275543595):

1275543595|andrew|A|src/main.cpp
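For anyone who prefers to see the format as code, here is a minimal Python sketch of how one such line is put together. Python is not part of the recipe itself, and the function name gource_line is made up for illustration.

```python
def gource_line(timestamp, user, path, action="A"):
    """Build one line of the pipe-delimited log: timestamp|user|action|path."""
    # 'A' marks an added file, as in the example line above.
    return "|".join([str(int(timestamp)), user, action, path])


print(gource_line(1275543595, "andrew", "src/main.cpp"))
```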

Getting the data – building a source

To build the log file we need details of the records from Jorum. Fortunately Jorum implements the OAI Protocol for Metadata Harvesting, which is designed to allow the easy sharing and access of repository data. I say easy, but in reality it’s easy if you have another repository on a server somewhere that can consume OAI data; it’s not easy to find a desktop based solution. After a lot of trial and error I’ve arrived at a solution using a combination of MS Excel and Google Refine (BTW “Google Refine is a power tool for working with messy data, cleaning it up, transforming it from one format into another” – it’s also open source).

I had hoped to do all of this in Google Refine but was struggling with the initial data import, recognising the schema and including records with multiple subject classifications, so we briefly start with MS Excel.

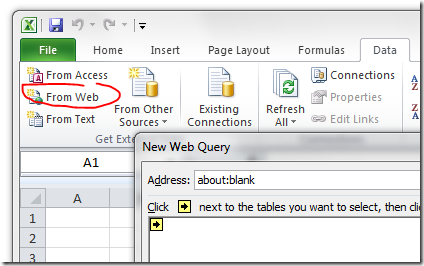

In Excel (I’m using 2010, other versions may work) we want to start a new workbook. In the Data ribbon select ‘From Web’. In the dialog that opens in the Address bar enter http://dspace.jorum.ac.uk/oai/request?verb=ListIdentifiers&metadataPrefix=oai_dc .

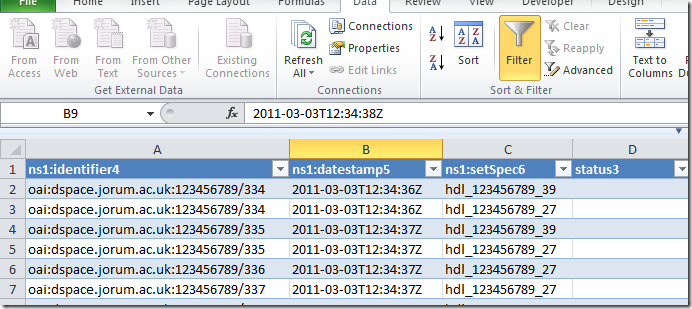

Once it’s finished loading (which can take a while) click Import. You’ll now get some dialog boxes warning you about the xml import but you can ignore those. You should now have a sheet of List Identifiers, that is a list of all the record identifiers (ns1:identifier4) and the subject set they are attached to (ns1:setSpec6status3) – you’ll find that there are more columns, mainly blank, which we don’t need.
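If Excel isn’t an option, the same list could in principle be pulled out of the XML with a few lines of Python. This is only a sketch under assumptions: the SAMPLE document and its identifiers are invented, and only the first setSpec of each header is kept. A real harvest may also be split over several pages linked by resumptionToken elements, which this sketch does not follow.

```python
import xml.etree.ElementTree as ET

# Namespace used by OAI-PMH 2.0 responses.
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}

# Invented sample response; real identifiers and set ids will differ.
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListIdentifiers>
    <header>
      <identifier>oai:example.org:123/1</identifier>
      <datestamp>2010-06-03T05:39:55Z</datestamp>
      <setSpec>hdl_123_10</setSpec>
    </header>
    <header status="deleted">
      <identifier>oai:example.org:123/2</identifier>
      <datestamp>2010-06-04T00:00:00Z</datestamp>
      <setSpec>hdl_123_11</setSpec>
    </header>
  </ListIdentifiers>
</OAI-PMH>"""


def list_headers(xml_text):
    """Return one dict per <header>: identifier, first setSpec and status."""
    root = ET.fromstring(xml_text)
    rows = []
    for header in root.iterfind(".//oai:header", OAI_NS):
        rows.append({
            "identifier": header.findtext("oai:identifier", default="", namespaces=OAI_NS),
            "setSpec": header.findtext("oai:setSpec", default="", namespaces=OAI_NS),
            "status": header.get("status", ""),  # 'deleted' or blank
        })
    return rows


for row in list_headers(SAMPLE):
    print(row)
```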

Next we want to add some readable subject classification to the data by changing setSpec ids into text equivalents. This data is also available via Jorum’s OAI service and the raw data can be seen by looking at http://dspace.jorum.ac.uk/oai/request?verb=ListSets.

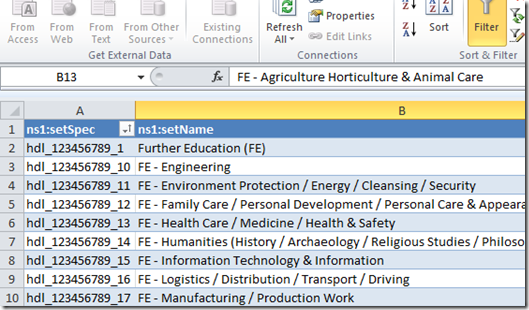

To get this data into Excel we want to follow a similar process to above in the same spreadsheet getting Data – From Web using http://dspace.jorum.ac.uk/oai/request?verb=ListSets as the address. This gives us a sheet similar to below with setSpec ids and associated category name.

Next we want to match this data to the sheet of List Identifiers. To do this we first want to sort the data we just captured on the setSpec column. Now in the original sheet add a new column and enter the following formula in the cell immediately beneath the column name (row 2):

=VLOOKUP([@[ns1:setSpec6]],ListSets!A:B,2,FALSE)

This formula looks up the setSpec6 value, matches it against the data we just got and returns a setName. You can now save this spreadsheet.
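The lookup is just a dictionary match. A rough Python equivalent, with made-up set ids and names standing in for the ListSets sheet, might look like this:

```python
def add_set_names(records, set_names):
    """Attach a setName to each record by looking up its setSpec.

    Unmatched setSpecs get None, roughly where VLOOKUP would show #N/A.
    """
    return [dict(record, setName=set_names.get(record["setSpec"])) for record in records]


# Hypothetical data for illustration only.
set_names = {"hdl_123_10": "HE / Engineering", "hdl_123_11": "HE / Law"}
records = [
    {"identifier": "oai:example.org:123/1", "setSpec": "hdl_123_10"},
    {"identifier": "oai:example.org:123/2", "setSpec": "hdl_999_99"},
]

for row in add_set_names(records, set_names):
    print(row["identifier"], row["setName"])
```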

Getting more data using Google Refine

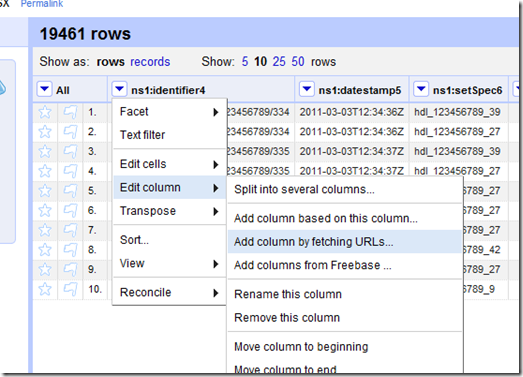

So far we’ve got a list of record ids from Jorum and the subject category for each record. We still need to get when the record was created, who by, and the resource title. To do this we are going to use Google Refine. If you haven’t already, here’s how to install Google Refine. Open Google Refine and create a new project from the Excel file we just created. The default settings should work; just make sure you select the sheet with 19,000 plus rows.

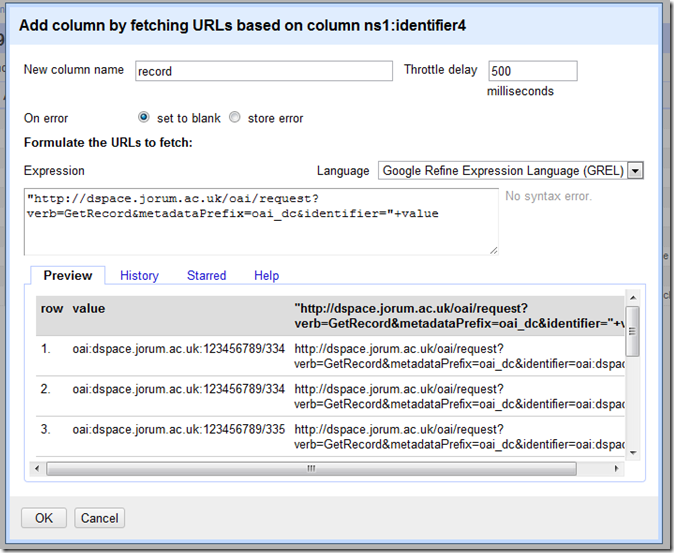

After the project has been created we want to get more information for each record identifier. From the ns1:identifier4 column drop-down menu select Edit column > Add column by fetching URLs:

In the dialog box that opens use the following settings:

- New column name – record

- Throttle delay – 500

- On error – set to blank

- Language – GREL

- Expression –

"http://dspace.jorum.ac.uk/oai/request?verb=GetRecord&metadataPrefix=oai_dc&identifier="+value

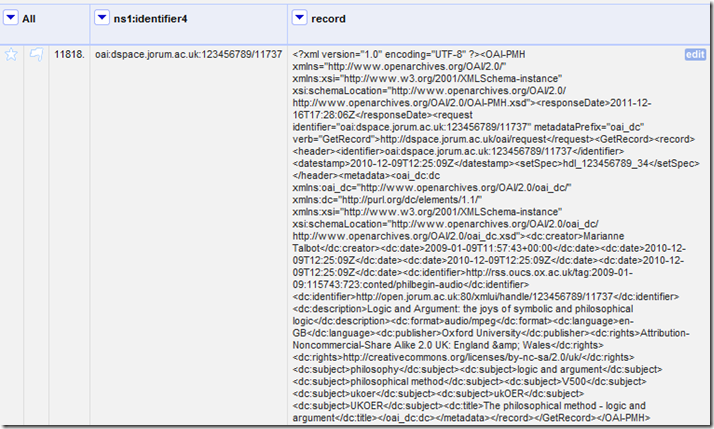

When you hit OK Google Refine will use the row value to fetch even more data from Jorum and enter it into a cell. This is done using another entry point to OAI services, using each identifier to get all the record data (here’s an example response). As this has to process over 19,000 requests it can take some time. If you would prefer not to wait here’s an export of my Refine project with the data already collected.
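For reference, the same fetch-with-a-delay idea can be sketched in Python. The function names are illustrative, the 0.5 second pause mirrors the 500 ms throttle above, and failures are stored as blanks like the ‘set to blank’ option. It assumes the Jorum endpoint still responds, which may not be the case.

```python
import time
import urllib.request

BASE = ("http://dspace.jorum.ac.uk/oai/request"
        "?verb=GetRecord&metadataPrefix=oai_dc&identifier=")


def record_url(identifier):
    """Same string concatenation as the GREL expression."""
    return BASE + identifier


def fetch_records(identifiers, delay=0.5, opener=urllib.request.urlopen):
    """Fetch each record in turn, pausing between requests; blank on error."""
    results = {}
    for identifier in identifiers:
        try:
            with opener(record_url(identifier)) as response:
                results[identifier] = response.read().decode("utf-8")
        except OSError:  # URLError and socket errors are OSError subclasses
            results[identifier] = ""
        time.sleep(delay)
    return results
```

The opener argument is only there so the loop can be tried out without touching the network.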

So now we have all the information we need but it’s all in one cell, so we need to do a bit more refining.

Extracting a date

You’ll notice that each record has a couple of dates stored in dc:date. Let’s look at extracting the first date we find. Google Refine has a couple of ways to parse a cell and get data out. Initially I tried using Jython but didn’t get very far, but thanks to some help from the Google Refine community I found I could use Refine’s GREL language. Here’s how.

From the new ‘record’ column dropdown select Edit column > Add column > Add column based on this column. In the dialog that opens set the new column name as firstDate and enter the following GREL expression:

forEach(value.parseHtml().select("dc|date"),v,if(length(v.htmlText())>11,v.htmlText().slice(0,10)+" "+v.htmlText().slice(11,19),"bad format")).sort()[0]

What is happening here is that, within the cell, forEach loops over every <dc:date>. If the text is longer than 11 characters we slice the first 10 characters (yyyy-mm-dd), add a space, then slice characters 11 to 19 (hh:mm:ss); otherwise we store ‘bad format’. As the dc:dates are temporarily stored in an array we sort this and get the first ([0]) value, which should be the earliest.
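The same logic written out in Python may make the steps easier to follow. This is a sketch of the idea rather than what Refine runs internally; it takes the dc:date strings as a plain list.

```python
def first_date(dc_dates):
    """Reformat each date as 'yyyy-mm-dd hh:mm:ss' and return the smallest."""
    cleaned = []
    for text in dc_dates:
        if len(text) > 11:
            # characters 0-9 are the date, 11-18 the time (skipping the 'T')
            cleaned.append(text[0:10] + " " + text[11:19])
        else:
            cleaned.append("bad format")
    # 'bad format' sorts after any digit-led date, so real dates win
    return sorted(cleaned)[0] if cleaned else None


print(first_date(["2010-06-03T05:39:55Z", "2009-04-01T12:00:00Z"]))
```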

Next we want to turn the date, which is being stored as a string, into a UNIX timestamp (the number of seconds or milliseconds since midnight on January 1, 1970). We need a timestamp as this is the date/time format used by Gource.

To get this we want to add a column based on firstDate. In the Add column based on column firstDate enter the name timestamp and switch the language to Jython (I found this the best for this procedure) and the expression:

import time

return int(time.mktime(time.strptime(value, '%Y-%m-%d %H:%M:%S')))

This takes the cell value and turns it into a Jython time object by matching the date/time pattern used in the firstDate column. As Jython times are stored as UNIX timestamps we can just return the value to the new cell.
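One thing to be aware of: time.mktime treats the parsed time as local time, so the result shifts with the timezone of the machine running Refine. If the dc:date values are in UTC (an assumption based on their ISO style), a variant using calendar.timegm gives the same number everywhere. A sketch in plain Python:

```python
import calendar
import time


def to_unix_utc(text):
    """Convert 'yyyy-mm-dd hh:mm:ss' to a UNIX timestamp, reading it as UTC."""
    return calendar.timegm(time.strptime(text, "%Y-%m-%d %H:%M:%S"))


# The Gource example date from earlier in the post
print(to_unix_utc("2010-06-03 05:39:55"))
```

For an animation spanning several years a few hours either way makes little visible difference, so this only matters if you need exact times.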

Some basic timestamp validation

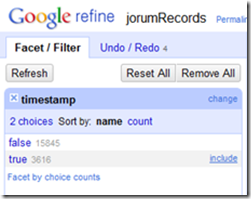

Initial visual inspection of the results shows that the status column contains a lot of records marked deleted. From the status column dropdown we can create an additional facet with Facet > Text Facet. In the Facet/Filter section we can see that there are 3616 occurrences of the text ‘delete’, so we can conclude that the blank timestamps are caused by deleted records, which we can live with.

Important tip: As we have filtered the data, any additional column operations will only be applied to the filtered rows, so before moving on remove these facets by clicking on the little ‘x’ next to them.

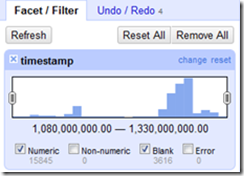

Next let’s sort the timestamps to check they are in a reasonable range. Do this by clicking the dropdown on timestamp and using the sort option, sorting the cells as numbers (check both ascending and descending order). You’ll notice some of the dates are in 2004; I’m happy with these as Jorum has been going for some time now.

[By turning on the numeric facet for the timestamp column we also get a little histogram which is handy for filtering rows].

Before moving on make sure timestamp is sorted smallest first.

So we now have a timestamp; next let’s extract the resource title.

Extracting a resource title

This is relatively straightforward as each record has a <dc:title>. So from the record column dropdown select Edit column > Add column > Add column based on this column. In the dialog box use GREL, name the new column ‘title’ and use the following expression:

value.parseHtml().select("dc|title")[0].htmlText()

[Each record only has one <dc:title> so it’s safe to just return the first title we find]
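Outside Refine, the equivalent lookup with Python’s standard XML parser could look like the sketch below. The SAMPLE_RECORD is a cut-down, invented record; real GetRecord responses carry more wrapping elements, which the search path ignores.

```python
import xml.etree.ElementTree as ET

# Dublin Core element namespace used by oai_dc records.
DC = "{http://purl.org/dc/elements/1.1/}"

# Invented, minimal record for illustration.
SAMPLE_RECORD = """<record xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
        xmlns:dc="http://purl.org/dc/elements/1.1/">
  <oai_dc:dc>
    <dc:title>Introduction to widgets</dc:title>
    <dc:date>2010-06-03T05:39:55Z</dc:date>
  </oai_dc:dc>
</record>"""


def first_title(record_xml):
    """Return the text of the first <dc:title>, or None if there isn't one."""
    element = ET.fromstring(record_xml).find(".//" + DC + "title")
    return element.text if element is not None else None


print(first_title(SAMPLE_RECORD))
```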

Reconciling who ‘damn’ made these resources

The headache comes from resource creators filling in information about their submission, including information about who made it. This means that there are inconsistencies in how the data is entered: some records use a separate creator for the institution name, others include it with their name, and others omit this data altogether. For the visualisation I wanted to resolve the resource against an institutional name rather than an individual or department. Here’s how the data was reconciled.

Let’s start by extracting all the creators so we can see what we are dealing with. We can do this by again using Edit column > Add column > Add column based on this column from the ‘record’ column. This time let’s call the new column ‘creators’ and use the following GREL expression:

forEach(value.parseHtml().select("dc|creator"),v,v.htmlText()).join(",")

This loops over each <dc:creator>, gets its text and stores the results as a comma separated string.
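A rough Python counterpart, again using an invented sample record and an illustrative function name:

```python
import xml.etree.ElementTree as ET

DC_CREATOR = "{http://purl.org/dc/elements/1.1/}creator"

# Invented sample; real records list whatever the depositor typed in.
SAMPLE_CREATORS = """<record xmlns:dc="http://purl.org/dc/elements/1.1/">
  <dc:creator>Smith, Jane</dc:creator>
  <dc:creator>The University of Nottingham</dc:creator>
</record>"""


def creators_string(record_xml):
    """Join the text of every <dc:creator> with commas."""
    root = ET.fromstring(record_xml)
    return ",".join((element.text or "") for element in root.iter(DC_CREATOR))


print(creators_string(SAMPLE_CREATORS))
```

Note that names written as ‘Smith, Jane’ already contain a comma, so the joined string can’t always be split back into the original creators.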

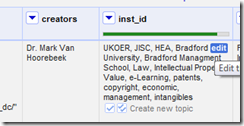

For the early records you’ll notice that it’s a named person and there is little we can do to reconcile the record against an institution. For the later records you’ll see named people and an institutional affiliation. So let’s see if we can extract these institutions into their own column.

From the creators column dropdown add a column based on this one calling it inst_id and using the following GREL expression

if(contains(value.toLowercase(),"university"),filter(value.toLowercase().split(/[-,.;\(\)]|(\s+for+\s)+/),v,contains(v,"university"))[0].trim(),if(contains(value.toLowercase(),"centre"),value.toLowercase(),""))

What this expression is doing is if the value contains the word ‘university’ the string is split into an array using the symbols –,.;() or the word ‘for’ and the array value with ‘university’ is stored, else if the value contains the word centre this value is stored (the OER Programme has projects from Universities and HEA Subject Centres).
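Here is the same rule as a Python sketch, which may be easier to tweak and test on a handful of strings before running it over 19,000 rows. The function name is made up, and the pattern uses a non-capturing group because Python’s re.split would otherwise return the captured text as extra list items.

```python
import re

# Split on - , . ; ( ) or on the word 'for' surrounded by whitespace.
SPLIT_PATTERN = re.compile(r"[-,.;()]|(?:\s+for+\s)+")


def guess_institution(creators):
    """Guess an institution name from a comma separated creators string."""
    lowered = creators.lower()
    if "university" in lowered:
        parts = SPLIT_PATTERN.split(lowered)
        matches = [part for part in parts if "university" in part]
        return matches[0].strip() if matches else ""
    if "centre" in lowered:
        return lowered  # keep the whole value, as the GREL version does
    return ""


print(guess_institution("Smith, Jane,The University of Nottingham"))
```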

Some additional refining via faceted filters and edit cells

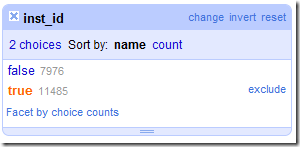

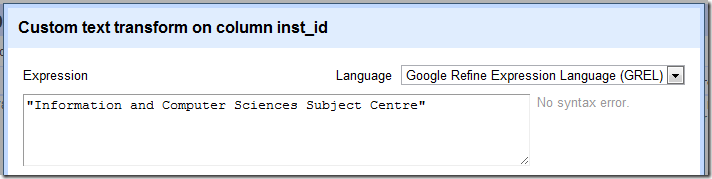

Scrolling through the records we can see some records have a creator that begins with ‘UKOER,Open Educational Repository in Support of Computer Science’. On the creators column, from the dropdown select ‘Text filter’ and use ‘Open Educational Repository in Support of Computer Science’. With this filter in place we can see there are 669 records. As we are confident these files were submitted as part of the Information and Computer Sciences Subject Centre’s work we can autofill the inst_id column with this data by clicking the dropdown on the inst_id column and selecting Edit cells > Transform. In the expression box enter “Information and Computer Sciences Subject Centre” and click OK.

Remove the ‘creators’ filter by clicking the small ‘x’ in the top left of the box.

Let’s add a new text filter to the record column (you should know how to do this by now) with the word ‘university’. This should filter 878 rows or so. To make it easier to see what it is matching press Ctrl+F to bring up your browser’s Find on page and look for university.

Moving through the data you’ll see things like:

- 384 rows can have inst_id’s by using the cell transformation

filter(cells["record"].value.parseHtml().select("dc|publisher"),v,contains(v.htmlText().toLowercase(),"university"))[0].htmlText()

- 89 rows include the term “University of Plymouth” in the dc:description, we can filter and fill these using the subject centre method.

- 81 rows can have university names pulled from dc:subject using

filter(cells["record"].value.parseHtml().select("dc|subject"),v,contains(v.htmlText().toLowercase(),"university"))[0].htmlText()

At this point if we just use the blank inst_id facet we’ve got 10,262 true (ie blank inst_id’s) and 9199 false, so a 47% hit rate … not great! But if we add a ‘ukoer’ text filter to the record column this improves to 8433 inst_id’s in 9955 matching rows, which is a hit rate of just under 85%. Whilst this isn’t perfect it’s probably the best we can do with this data. Next we turn those institutional id guesses into reusable data.
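The hit rates are simply filled rows divided by total rows. As a quick check of the arithmetic in Python, using the counts quoted above:

```python
def hit_rate(filled, total):
    """Percentage of rows that have an inst_id."""
    return 100.0 * filled / total


all_rows = hit_rate(9199, 10262 + 9199)   # every record
ukoer_rows = hit_rate(8433, 9955)          # records matching 'ukoer'

print(round(all_rows, 1), round(ukoer_rows, 1))
```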

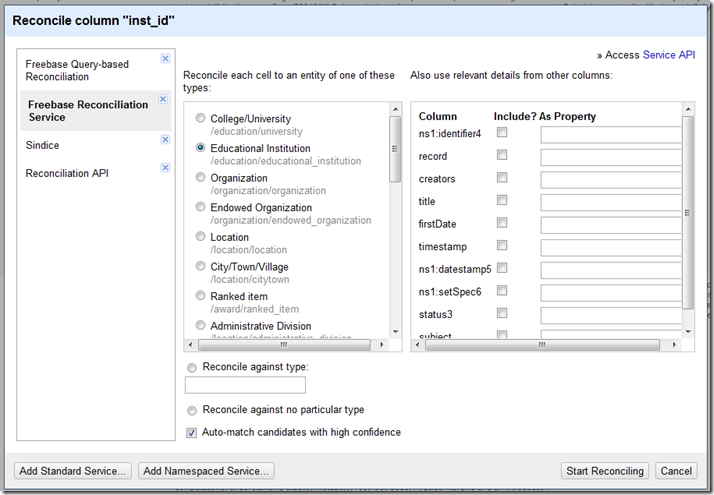

The real magic: reconciling institutional names against CETIS PROD

So far we’ve tried to extract an institutional origin from various parts of the Jorum data and there is a lot of variation in how those ids are represented. For example, the inst_id column might have ‘the university of nottingham’, ‘university of nottingham’ or even ‘nottingham university’. To make further analysis of the data easier we want to match these variations against a common identifier, in the example above the ‘University of Nottingham’.
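To make the goal concrete, here is a toy Python sketch that maps those three variants onto one name. It is not how a reconciliation service works (those use much richer matching and scoring); the lookup table and the two tidy-up rules are invented purely to show the kind of result we are after.

```python
# Hypothetical lookup table; a real service holds many more names.
CANONICAL_NAMES = {"university of nottingham": "University of Nottingham"}


def normalise(inst_id):
    """Map simple spelling variants of an institution onto one common name."""
    key = " ".join(inst_id.lower().split())
    if key.startswith("the "):
        key = key[len("the "):]
    words = key.split()
    # 'nottingham university' -> 'university of nottingham'
    if len(words) == 2 and words[1] == "university":
        key = "university of " + words[0]
    return CANONICAL_NAMES.get(key)


for variant in ["the university of nottingham", "university of nottingham", "nottingham university"]:
    print(variant, "->", normalise(variant))
```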

Google Refine has some very powerful reconciliation tools to help us do it. More information on Google Refine Reconciliation here.

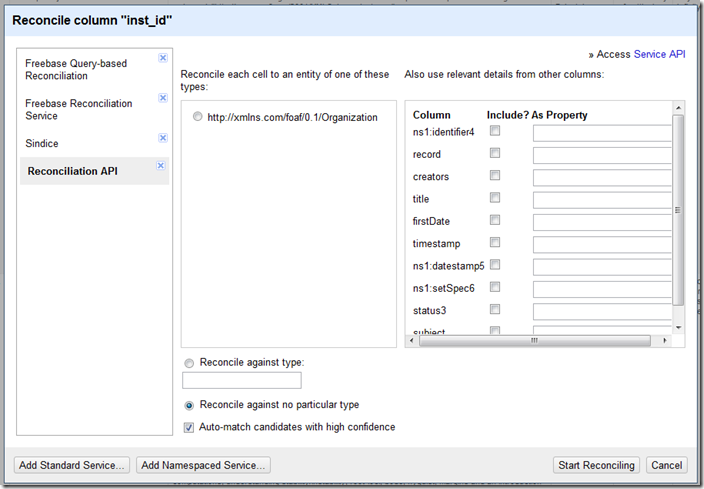

In the inst_id column select Reconcile > Start reconciling.

Google Refine has existing Freebase databases, which we could use to match institutional names against database ids, but as we are dealing with JISC/HEA projects it makes more sense to try and reconcile the data against the CETIS PROD database (this opens up further analysis down the line).

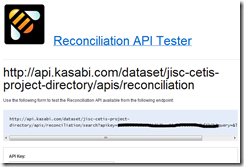

http://api.kasabi.com/dataset/jisc-cetis-project-directory/apis/reconciliation/search?apikey=aaaaaaaaaaaaakkkkkkkkkkeeeeeyyy

Back in the Google Refine Reconciliation dialog box click on ‘Add Standard Service …’ and enter the url you just created. Once added click on the new Reconciliation API, select ‘Reconcile against no particular type’, then click ‘Start Reconciling’.

Exporting to Gource using a custom template

Almost there people ;). At the very beginning I mentioned that the visualisation tool Gource has its own input log format, shown below as a refresher:

1275543595|andrew|A|src/main.cpp

Another useful feature of Google Refine is Export Templating, which allows us to control how our data can be written out to a separate file.

In Google Refine make sure you have a text facet on the record column filtering for ‘ukoer’ and that the inst_id: judgement facet is on ‘matched’ (this means that when we export only this data is included). Now select Export > Templating …. Remove any text in Prefix, Row Separator and Suffix and in Row Template use:

{{cells["timestamp"].value}}|{{cells["inst_id"].recon.match.name}}|A|{{replace(cells["subject"].value," / ","/")}}/{{if(length(cells["title"].value)>20,cells["title"].value.slice(0,20)+"...",cells["title"].value)}}

This will write the timestamp cell value, then the reconciled name for the inst_id, then the subject value (stripping whitespace between slashes) and the resource title stripped down to 20 characters.
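Written as a Python function the template does the following. This is only a sketch to show the string handling; the function name and sample values are made up.

```python
def export_row(timestamp, institution, subject, title):
    """Build one log line: timestamp|institution|A|subject/path/short title."""
    path = subject.replace(" / ", "/")  # 'HE / Engineering' -> 'HE/Engineering'
    short_title = title[:20] + "..." if len(title) > 20 else title
    return "{}|{}|A|{}/{}".format(timestamp, institution, path, short_title)


print(export_row(1275543595, "University of Nottingham",
                 "HE / Engineering", "An introduction to thermodynamics"))
```

One caveat that applies to the template as well: a title or institution name containing a ‘|’ would throw the columns out, so it may be worth checking for those before exporting.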

Finally, Gource

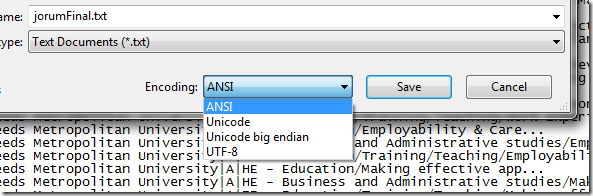

Google Refine will spit out a .txt file with the formatted data. Before we use it with Gource there is one thing we need to do. Initially I was getting log file format errors in Gource and then discovered it was a .txt file encoding problem. So open your newly created .txt file (which is in UTF-8 format) and File > Save As changing the encoding to ANSI.
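If you’d rather not do this by hand, the conversion can be scripted. The sketch below assumes that ‘ANSI’ means Windows-1252, which is what Notepad uses on most Western European and US systems but not everywhere; characters with no Windows-1252 equivalent are replaced with ‘?’. The function name and file names are placeholders.

```python
def reencode(src_path, dst_path, target="cp1252"):
    """Rewrite a UTF-8 text file in another encoding (Windows-1252 by default)."""
    # 'utf-8-sig' also drops a byte order mark if the file starts with one
    with open(src_path, "r", encoding="utf-8-sig", newline="") as src:
        text = src.read()
    with open(dst_path, "w", encoding=target, errors="replace", newline="") as dst:
        dst.write(text)


# Example (placeholder file names):
# reencode("jorum-log-utf8.txt", "jorum-log-ansi.txt")
```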

To test your visualisation download gource and extract the files. In the same directory as your extracted files place a copy of your refined log file. To view what you’ve got open your command line, navigate to your extracted gource location and execute:

gource nameoflogfile.txt

The gource site has more instructions on recording videos.

– THE END –

well almost … Here’s:

- the final Google Refine project files;

- my generated log file; and

- export of reconciled data in a Google Spreadsheet (here’s a better version with more metadata extracted and filtered for ukoer)

The bigger picture

This work was undertaken as part of my OER Visualisation work (day 11) and while it’s useful to have the Jorum OER snowflake visualisation in the bag, having a refined data source opens up more opportunities to explore and present OER activity in other ways. For example, I immediately have a decent sized dataset of OER records with subject classification. I’ve also matched records against PROD data which means I can further reconcile against project names, locations etc.

Yummy data!

Jenny Delasalle

’tis a thing of beauty!

Jackie

Hi Martin – was delighted to see this visualisation. Saw your tweet last week about having ‘Jorum on a stick’, and now to see what you have been able to do with the data extracted via OAI-PMH in such a short space of time is great.

It poses a number of questions (e.g. as a visualisation it’s limited as actually I want to interact with it) but there are some clear signals coming through regarding which institutions are engaging with OER via Jorum. Are there any tools available that would enable us to ‘data brush’ so that we could query the results of the visualisation? I’d like to know, for example, whether there are easy to spot patterns in deposits over time, or whether we can rank institutions/communities according to content deposited. I’m currently involved in an OU run project searching for good OER content and the findability factor that Jorum supports is one of its strengths.

I’ll also be interested to know whether others take your recipe and apply it to their own repositories – so thanks for sharing that.

And lastly – did you have other ideas about what you could do with the data you had from Jorum? If so please share with us.

Martin Hawksey

Hi Jackie – still working on how best to present the data in an interactive and engaging way, but in terms of a quick data brush there’s a lot you can do with spreadsheets once you’ve learned some tricks. Here’s some of the raw data used in the snowflake visualisation in a Google Spreadsheet (all the reconciled records are there, I just couldn’t upload all the columns). If you File > Make a copy of this you’ll be able to see some of the formulas being used. Here’s a summary of the sheets:

jorumRecords – raw data which can be filtered/sorted using header drop-downs

Summary – using UNIQUE formula making lists of number of records per institution and subject category

DepositSubjectCount – lists institutions and breaking 2009-2010 into quarters to count accumulation of deposits and subjects (data is used in motion chart in sheet DepositSubjectCountChart)

DepositDistribution – monthly breakdown between 2009-2010 of ukoer deposits to jorum for each submitting institution and detecting the top depositor each month (data is used in time line chart on sheet DepositDistributionChart)

viewCountPivot – Pivot Table summing the view counts (as of yesterday) for ukoer resources

In terms of methods for spotting patterns, the headache is refining the data each time to work out which institution is submitting but once you have this it could just be a case of inserting the new data into the first sheet which would update all the other sheets. Ranking is an interesting one. Currently I’m torn between treating the ukoer community as a whole because as soon as you start ranking you create dysfunctional behaviour and providing the data as a means of feedback to let institutions know how they compare.

As for ideas, watch this space 😉

Martin

Simon Bains

Fascinating. I can see real value in this method as a powerful representation of take up of a repository. As we implement a policy in support of green OA at Manchester in 2012 it would be a valuable visual tool to demonstrate the response of our academic community in terms of full text deposits. It reminds me of some of the outputs of the Mosaic project (some of which were interactive): http://www.sero.co.uk/jisc-mosaic-results.html
