I keep getting this nagging voice in my head to do something with Google+ and Google Spreadsheets. I’ve resisted until now as I don’t think there is enough of an official API there to satisfy my current interest in social network analysis. This hasn’t stopped other people from getting off the beaten track and developing some really useful stuff.

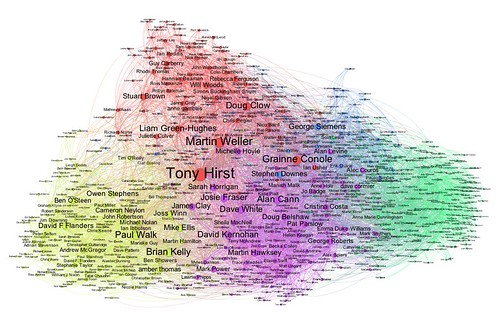

A while ago Tony (Hirst) posted a Fragment: Looking up Who’s in Whose Google+ Circles…,which highlights how friend and follower information can be extracted from Google using an undocumented API. Tony followed this up with So Where Am I Socially Situated on Google+? in which he used a genius bit of Python scripting to extract a large fragment of his extended Google+ network which he then processed in Gephi.

A while ago Tony (Hirst) posted a Fragment: Looking up Who’s in Whose Google+ Circles…,which highlights how friend and follower information can be extracted from Google using an undocumented API. Tony followed this up with So Where Am I Socially Situated on Google+? in which he used a genius bit of Python scripting to extract a large fragment of his extended Google+ network which he then processed in Gephi.

Here’s my take on the problem, a Google Spreadsheet template to extract Google Plus network information for analysis in the tool of your choice, in my case NodeXL.

If you want to go and play straight away here’s a link to:

*** Get Google Plus Friend/Follower Information ***

(File > Make a copy to use yourself)

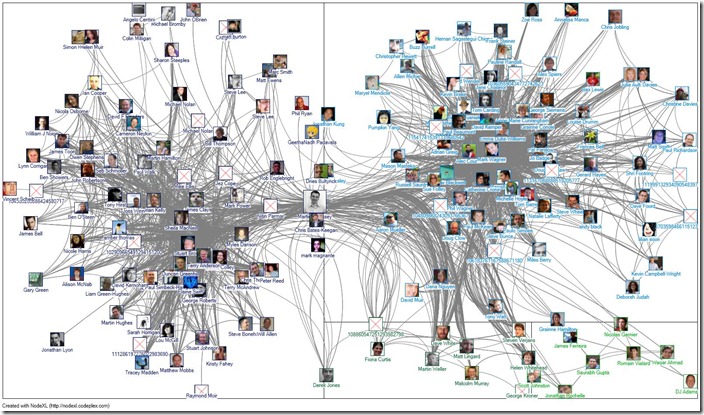

Below is an example output from my network generated in NodeXL (and a copy in my online NodeXL viewer ;):

How to use

Instructions for use are contained in the Spreadsheet Readme sheet. My top tip is be patient, it can take a while to grab the data so don’t be alarmed if a minute goes by without a notification from the sub-level run. If nothing is happening after 5 minutes something has gone wrong to try running ‘Google+ > Start/continue collection’ again (it should continue from where it left off). Also this script is hot off the press so if you are having problems comments are very welcome.

One other very important caveat is looking at the execute logs the script isn’t getting friend data for everyone. Not entirely sure why but might be Google trying to prevent use of an undocumented API. This means some nodes (in one sample 10%) are lacking outbound data.

Getting data into NodeXL

I’m not familiar with getting the data into tools like Gephi (other than via a NodeXL export) so if someone wants to post or link to something to do this for other tools leave a comment or get in touch. Instead here are some basic instructions for getting the data into NodeXL:

- Once you collected the data from the Google Spreadsheet select File > Download As > Excel

- Open the downloaded file in Excel making sure you the Links sheet is active

- Start a new NodeXL template and from the NodeXL ribbon menu select Import form Open Workbook

- Make sure the dialog is pulling data from the Links sheet and ‘Columns have headers’ is not ticked

- Make sure Type is set to Directed and click Prepare Data > Get Vertices from Edge List

- Back in the Excel spreadsheet with your downloaded data right-click on the Nodes tab and select ‘Move or Copy…’ and change the To book dropdown to your new NodeXL template

- Back in your NodeXL template open the Vertices sheet and add two ‘Other columns’ with the headings ‘Name’ and ‘ImageUrl’

- In the cell beneath the new Name column enter the formula

=IF(VLOOKUP([@Vertex],Nodes!A:C,2,FALSE)=0,[@Vertex],VLOOKUP([@Vertex],Nodes!A:C,2,FALSE)) - In the cell beneath the new ImageUrl column enter the formula

=VLOOKUP([@Vertex],Nodes!A:C,3,FALSE) - In the cell beneath the Visual Properties Image File column you can enter the formula

=[@ImageUrl]then fill the Shape column with Image (you may of course decide not to use images for your nodes, it’s up to you) - Do your other analysis: graph metrics, groups, autofill etc

Some technical notes on using Google Apps Script to collect the data

Processing time – using ScriptProperties as a cookie

Using Google Spreadsheet/Google Apps Script I can’t the same extent of information (where Tony got a sample of who his friends were also following I’m just getting which of my friends follow each other). This is because Apps Scripts are executed server-side and automatically timeout after around five minutes. Even with a more focused data set in mind I was finding I could processed all the data in 5 minutes so had to resort to breaking the process into chucks using the build-in ScriptProperties (it’s like a script cookie) to store where the process was up to.

Caching in the Cloud with Google Apps Script

Google recently announced the addition of a CacheService in Google Apps Script. In Tony’s original code he was also caching responses from APIs to prevent multiple lookups. In my version there is less need for caching as I only make one grab for each friend or follower. I still implemented the CacheService as a way to backup a sublevel processing run. The scenario being you get most of the way through the 50 calls per block when the script fails, the next time it can just re-read the data it already got from the cache speeding things up. Doing this I did however find the limits of the CacheService:

- cache names have a size limit (Solution: I trimmed mine to 240 characters),

- cache size looks like it might have a 100k limit (Solution: caching only the data I needed and wrapping put in a try/catch exception – if I couldn’t put it I’d just make a fresh call if I needed the data)

- anything cached is stored as a string (Solution: some array splitting and joining in the right places)

Below is how the function for this ended up looking (I’m I’m sure it can be refined – I’m just a hobbyist coder). The full code is here.

function getCachedDataOids(url) {

var cache = CacheService.getPublicCache(); // initialize

var oids = cache.get("f"+url.substr(0,240)); // get data if any (url trimmed to prevent error)

if (oids == null || oids == "") { // if null or empty means there is no cached data or last fetch failed

var requestData = {"method":"GET", "headers": { "User-Agent": "http://docs.google.com"}}; // prepare request

var response = UrlFetchApp.fetch(url, requestData); // try fetch

if (response.getResponseCode() == 200) { // if response then

var resp = response.getContentText(); // get response text

var reobg = new RegExp("\\d{21}","g"); // only interested in Google Plus user ID so prepare regexp

oids = resp.match(reobg); // get an array of ids

if (!oids == null){ // if something stringify it

oids = oids.join(",");

}

try {

oids = oids.join(",");

cache.put("f"+url.substr(0,240), oids, defCache); // try and put it in cache

} catch(e){

Logger.log(e+url)

return oids; // if too big just return it

}

}

}

return oids;

}Enjoy 😉

Using Google Apps Script to extract Google+ friend/follower information for analysis in NodeXL, Gephi – MASHe | Google Apps Script | Scoop.it

[…] Using Google Apps Script to extract Google+ friend/follower information for analysis in NodeXL, Gep… A while ago Tony (Hirst) posted a Fragment: Looking up Who’s in Whose Google+ Circles…,which highlights how friend and follower information can be extracted from Google using an undocumented API. Tony followed this up with So Where Am I Socially Situated on Google+? in which he used a genius bit of Python scripting to extract a large fragment of his extended Google+ network which he then processed in Gephi. […]

rams

I am trying to run the script.. i am getting the below error. Any suggestions.

TypeError: Cannot call method “split” of null. (line 67)

Martin Hawksey

@rams not entirely sure why that is happening. What is the Google Plus ID you’re trying to get data for?

OER Visualisation Project: Processing a resource feed to find frequency using Google Spreadsheets [day 3] #ukoer #ooher – MASHe

[…] getting the Pipe as JSON. JSON input powers a lot of my other Google Spreadsheet toys like TAGS and Google+ network generator so I’ve a growing bank of code snippets I can throw together.Here’s my ‘Frankenstein’ code […]

Tony Hirst

If you publish a spreadsheet with actual data in it, I’ll have a go at publishing a programmatic spreadsheet to gexf recipe…

Tony Hirst

doh – realised you have posted a ss id… apols…

Tony Hirst

So does this work? https://scraperwiki.com/views/googleplus_net_spreadsheet_grapher/ (look at the view and save it as a .gexf file)

Martin Hawksey

Brilliant! I’ve made some tweaks and posted back in the comments http://blog.ouseful.info/2012/04/12/appropriating-scraperwiki-views-for-data-format-transformation-google-spreadsheet-csv-to-gexf/#comment-21509

Appropriating Scraperwiki Views for Data Format Transformation – Google Spreadsheet CSV to GEXF « OUseful.Info, the blog…

[…] for example, in the post Using Google Spreadsheets to extract Google+ friend/follower information for analysis in NodeXL, Gep…, Martin Hawksey describes a way of scraping Google+ friends data into a Google spreadsheet, along […]

Tunyo

I’m trying to run the script, but upon initialising the collection, I always get the following error message:

‘Script start experienced an error’

Any idea about what goes wrong?

Martin Hawksey

This recipe is so old I’m guessing it’s broken because there is now an official Google+ API