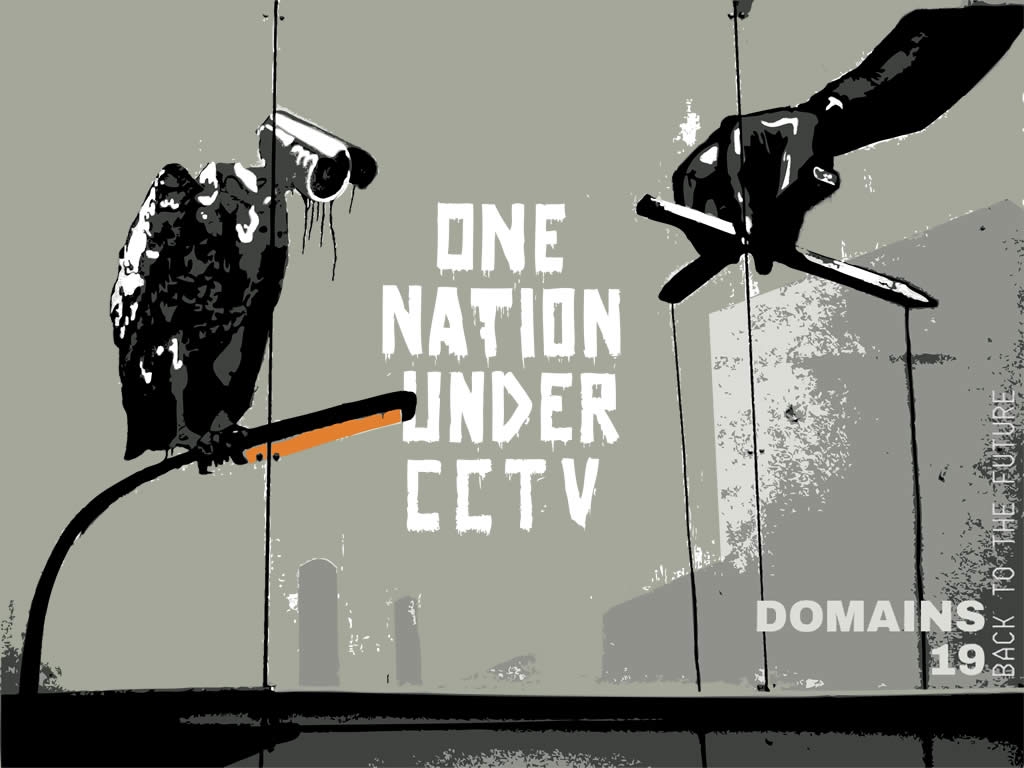

Late last year I was invited to speak at Reclaim Hosting’s #Domains19 conference following a chance conversation with Jim Groom (#BigFan). We were talking about one of the #Domains19 themes, ‘privacy and surveillance’. This post is designed to capture some of the context and ideas around my subsequent ‘Minority Report – One Nation Under CCTV’ talk. As part of this I’ll share some demos I’ve made for Domains. The talk and demos are designed to put a spotlight on face detection and recognition solutions, in particular how quick and easy it has become to use this technology, often at the cost of our privacy.

As the creator of TAGS, I often have privacy and surveillance at the back of my mind. From the beginning TAGS was designed to help show people the amount of data we personally share and how easy it is for anyone to access. We all know that technology is not neutral, and whilst there is a long list of people using TAGS for positive purposes, by its nature there are some who turn to the dark side.

In the case of face detection and recognition there are similar issues. One of the biggest differences, however, is that where we ‘choose’ to share data on social media, with face detection and recognition we can be surveilled, often without our knowledge and increasingly without choice. Currently legislation is still catching up. Although there are promising signs from places like the state of Illinois and the city of San Francisco, we are in a wild west frontier where it appears anything goes.

There are uses of face recognition I can personally accept; for example, I can completely understand its use for border control. Law enforcement is arguably where the technology originated, first with Sir Francis Galton’s work on face description in the 1800s and latterly, in the 1960s, with the work of Woody Bledsoe, Helen Chan and Charles Bisson on the first implementation of automatic facial recognition of police mugshots. In that case I’m concerned that our human rights are being eroded.

Let’s first look at how the ‘face race’ has taken off in the last couple of years. Historically state sponsorship has been at the heart of face recognition development. It’s been documented that Bledsoe and colleagues faced restrictions on reporting their work because their work was funded by an unnamed intelligence agency. This continues and more recently China has lit a new rocket fueled by their ‘social credit’ scheme used to monitor individuals at unprecedented levels, to the point of how much toilet roll you are allowed in public toilets.

One of the accelerants has been access to data. In the 60s the data available to develop face recognition systems was limited. Bledsoe started with 2,000 mugshots; now there are datasets with 100 million photos and more. But where does all this data come from? Ultimately it comes from us, whether we like it or not. If you walked through Oxford town centre between late November and early December 2007 your face is potentially in a dataset, if you were on Duke University campus in March 2014 it’s potentially in a dataset, if you’ve ever shared an image on the internet it’s potentially in a dataset.

It’s worth repeating the last one … ‘if you’ve ever shared an image on the internet it’s potentially in a dataset’!!! At this point you might think I’m referring to the highly publicized news story of 100 million Creative Commons licensed images from Flickr being used by IBM to improve their face detection/recognition algorithm. Whilst it’s true the images used by IBM were CC licensed, this is a moot point. Even if these photos had a traditional copyright licence, ‘fair use’ would have allowed IBM to data mine your photos without requiring any permission from you first. To illustrate this, did you ever give Google or any other search engine permission to index images associated with your name?

So your data is being used without consent to make these systems. What about how this technology is being used? Let’s look at the UK Metropolitan Police’s facial recognition trials as an example of how this technology is potentially being abused. To give the Met credit, they are being transparent about their use of this technology on one of their advice and information pages. But when you look in more detail at the section on ‘how the law lets [them] use facial recognition’, linking to the entire Human Rights Act, the entire Freedom of Information Act, the entire Protection of Freedoms Act, and the entire Data Protection Act gives me little comfort (it also appears that they’ve forgotten to link to the entire GDPR…).

Like most members of the public I don’t have the expertise to say under what lawful basis the Met are using facial recognition, which is why having judicial and regulatory bodies is crucial in protecting our individual rights. In the case of the Met trial they name three: the Information Commissioner’s Office (ICO), the Surveillance Camera Commissioner (SCC), and the Biometrics Commissioner (BC). My first concern is that, as I understand it, while the ICO have some power, the Biometrics Commissioner appears to be limited to fingerprints and DNA and the Surveillance Camera Commissioner ‘has no enforcement or inspection powers’. As part of the first major legal challenge to automated facial recognition surveillance, the BBC has reported that the ICO’s own lawyers have said “facial recognition technology used by a police force lacks sufficient legal framework”.

Another concern is how facial recognition is being used to erode our freedom of expression and right to privacy. Already several countries have legislation against the full covering of faces in public. Whilst often headlined as ‘burka bans’, this legislation actively prevents all of us from opting out of facial recognition. For example, the French ‘Loi interdisant la dissimulation du visage dans l’espace public’ (‘The Act prohibiting concealment of the face in public space’) is a:

“ban on the wearing of face-covering headgear, including masks, helmets, balaclavas, niqābs and other veils covering the face in public places, except under specified circumstances”. Source: Wikipedia

Even without such legislation in place yet in the UK the police are finding ways to penalise those who don’t wish to have their face scanned as shown in the clip here.

At this point it’s probably worth highlighting that this technology is not just about recognising a person. Many of the ‘face as a service’ solutions provide detailed demographic data. This is where marketing executives start getting very excited. Why have digital signage with generic advertising when you can display information targeted at a person based on their age, gender, race, whether they are wearing glasses or a hat, or even whether they just look happy or sad?

Before I’m accused of inventing the next wave of evil (again), these aren’t new ideas. Just like John Anderton walking through the shopping mall in Spielberg’s Minority Report, or someone standing in Walgreens in front of a ‘Cooler Screens’ display, your face has become a commodity; your face has become your store loyalty card.

Don’t believe me? This is where the Domains19 demos come in. I’ve already written about McFlyify, which is a Google Cloud Vision experiment to illustrate face detection. As part of this demo the user gets to see some of the data Google returns for a picture of their face. As well as the data points often used to fingerprint your face, Google’s Vision API also returns the likelihood of emotions such as joy, sorrow, anger and surprise.
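To give a feel for the data involved, here is a minimal Python sketch. It is not the McFlyify code. It builds the JSON body for a `FACE_DETECTION` request to the Vision API’s `images:annotate` REST method and pulls the emotion likelihoods out of a response. The helper names `build_face_request` and `summarise_faces`, and the hand-written `sample_response`, are my own illustrations; actually sending the request (and authenticating) is left out.

```python
import base64
import json

# Emotion fields reported for each face in a FACE_DETECTION response.
EMOTION_FIELDS = ("joyLikelihood", "sorrowLikelihood",
                  "angerLikelihood", "surpriseLikelihood")


def build_face_request(image_bytes, max_results=10):
    """Build the JSON body to POST to
    https://vision.googleapis.com/v1/images:annotate (hypothetical helper)."""
    return {
        "requests": [{
            "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
            "features": [{"type": "FACE_DETECTION", "maxResults": max_results}],
        }]
    }


def summarise_faces(response):
    """Return one dict of emotion likelihoods per detected face."""
    responses = response.get("responses") or [{}]
    annotations = responses[0].get("faceAnnotations", [])
    return [{field: face.get(field, "UNKNOWN") for field in EMOTION_FIELDS}
            for face in annotations]


# Hand-written example response, trimmed to the fields used above.
sample_response = {"responses": [{"faceAnnotations": [
    {"joyLikelihood": "VERY_LIKELY", "sorrowLikelihood": "VERY_UNLIKELY",
     "angerLikelihood": "VERY_UNLIKELY", "surpriseLikelihood": "UNLIKELY"}
]}]}

if __name__ == "__main__":
    print(json.dumps(summarise_faces(sample_response), indent=2))
```

Note that the API reports each emotion as a likelihood bucket (`VERY_UNLIKELY` through `VERY_LIKELY`) rather than a numeric score.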

To illustrate this type of data I’ve created ‘Domain Invaders’. This is another Google Vision experiment that detects faces in an image and overlays a Space Invader alien to highlight your level of detected happiness (green likely to be happy, red if not). Like McFlyify it seemed rude not to have a site that lets you do this as an animated gif.
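The colour rule can be sketched in a few lines of Python. This is an illustration rather than the actual ‘Domain Invaders’ code: the function names are made up, and the threshold of `LIKELY` for ‘happy’ is my assumption. It relies on the `joyLikelihood` and `boundingPoly` fields that the Vision API returns for each detected face.

```python
# Likelihood values used by the Vision API, ordered from least to most likely.
LIKELIHOODS = ("UNKNOWN", "VERY_UNLIKELY", "UNLIKELY",
               "POSSIBLE", "LIKELY", "VERY_LIKELY")


def invader_colour(joy_likelihood, threshold="LIKELY"):
    """Return 'green' when joy is at or above the threshold, otherwise 'red'."""
    if joy_likelihood not in LIKELIHOODS:
        joy_likelihood = "UNKNOWN"
    happy = LIKELIHOODS.index(joy_likelihood) >= LIKELIHOODS.index(threshold)
    return "green" if happy else "red"


def overlay_box(face):
    """Return (left, top, right, bottom) for placing the alien over a face.

    A missing coordinate is treated as 0.
    """
    vertices = face["boundingPoly"]["vertices"]
    xs = [v.get("x", 0) for v in vertices]
    ys = [v.get("y", 0) for v in vertices]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    face = {
        "joyLikelihood": "VERY_LIKELY",
        "boundingPoly": {"vertices": [
            {"x": 10, "y": 20}, {"x": 110, "y": 20},
            {"x": 110, "y": 140}, {"x": 10, "y": 140},
        ]},
    }
    print(invader_colour(face["joyLikelihood"]), overlay_box(face))
```

Drawing the alien itself is then a job for whichever image library you prefer; the point is how little logic sits between the API response and a per-person ‘happiness’ label.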

‘Domain Invaders’ is my light-hearted take on already proposed lecture surveillance systems. One of the scary things is that ‘Domain Invaders’ took me about 4 hours to code, and Google gives you 1,000 free image requests per month, after which each image costs only $0.0015.
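To put those numbers in perspective, here is a rough cost calculation in Python. It assumes the figures quoted in this post (1,000 free requests a month, then a flat $0.0015 per image) and ignores any other pricing tiers; `monthly_cost` is just an illustrative name.

```python
from decimal import Decimal

FREE_IMAGES_PER_MONTH = 1000            # free tier quoted in this post
PRICE_PER_IMAGE = Decimal("0.0015")     # USD per image beyond the free tier


def monthly_cost(images):
    """Return the cost in USD of analysing `images` pictures in one month."""
    billable = max(0, images - FREE_IMAGES_PER_MONTH)
    return billable * PRICE_PER_IMAGE


if __name__ == "__main__":
    for images in (500, 10_000, 1_000_000):
        print(f"{images:>9,} images: ${monthly_cost(images):,.2f}")
```

On those assumptions, 10,000 images in a month works out at 9,000 billable images, or $13.50.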

The final piece I produced was inspired by a conversation with Jim. When explaining the capabilities of some of the facial recognition systems, I described it as the scene from Minority Report, but instead of iris scanning your face would be used to target advertising (I also highlighted that John could have saved a lot of trouble if he just wore sunglasses … but I guess these are outlawed in 2054). I went on to explain to Jim that actually a number of facial recognition services can detect sunglasses. Jim being Jim, the conversation naturally led on to John Carpenter’s 1988 action horror sci-fi thriller ‘They Live’ in which:

Nada, a down-on-his-luck construction worker, discovers a pair of special sunglasses. Wearing them, he is able to see the world as it really is: people being bombarded by media and government with messages like “Stay Asleep”, “No Imagination”, “Submit to Authority”. Even scarier is that he is able to see that some usually normal-looking people are in fact ugly aliens in charge of the massive campaign to keep humans subdued. – Melissa Portell IMDb

The ‘They Live’ generator is a Kairos experiment that lets you relive the seminal moment when Nada walks down the L.A. street and the real truth is revealed. As well as letting people see how reliable Kairos is at detecting if you are wearing sunglasses, the experiment also lets you see the other demographic data returned, including age, gender and ethnicity … and again, because it’s Domains, lets you make an animated gif:

[The reason I chose to use Kairos instead of one of the other services that can return this type of data is that they have publicly stated that they won’t make their service available for government surveillance.]

If you are interested in my full Domains19 talk here is the slide deck (not CCing this one as there is a lot of ‘fair use’ content but you are more than welcome to re-purpose any of it), and a recording is on YouTube.

Hopefully more on my Domains19 experience will follow, including how I VJ’d my talk (recording available soon).